🧭 Introduction: The Imperative of Build and Release Management

In the rapidly evolving landscape of software development, efficient build and release management has become a cornerstone of successful DevOps practices. By automating and streamlining the processes of building, testing, and deploying applications, organizations can achieve faster release cycles, improved software quality, and enhanced collaboration between development and operations teams.

🧱 Understanding the Build and Release Management Maturity Model

The maturity of build and release management practices can be assessed through a five-level model:

- Level 1: Manual Processes
  Builds and deployments are performed by hand, which often leads to inconsistencies and errors.

- Level 2: Scripted Automation
  Basic scripts automate parts of the build and deployment process, reducing manual effort but lacking integration and scalability.

- Level 3: Continuous Integration (CI)
  Automated tools continuously integrate code changes, run tests, and produce build artifacts, improving code quality and catching defects early.

- Level 4: Continuous Delivery (CD)
  Automated pipelines deploy applications to staging environments, while production releases still require a manual approval.

- Level 5: Continuous Deployment
  Fully automated pipelines deploy every change that passes the pipeline to production without manual intervention, supporting rapid, reliable releases.
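To make the cumulative nature of the levels concrete, here is a minimal Python sketch that scores a team against the model. The `Practices` checklist and the `maturity_level` function are hypothetical names for illustration, and the sketch assumes each level requires every practice of the levels below it.

```python
from dataclasses import dataclass


@dataclass
class Practices:
    """Hypothetical yes/no checklist describing a team's current practices."""
    scripted_builds: bool = False              # Level 2: scripts automate parts of build/deploy
    continuous_integration: bool = False       # Level 3: every change is built and tested automatically
    automated_staging_deploy: bool = False     # Level 4: pipeline deploys to staging; prod needs approval
    automated_production_deploy: bool = False  # Level 5: pipeline deploys to prod with no manual gate


def maturity_level(p: Practices) -> int:
    """Return the highest level (1 to 5) whose prerequisites are all met."""
    # Levels are cumulative, so stop climbing at the first missing practice.
    ladder = [
        p.scripted_builds,
        p.continuous_integration,
        p.automated_staging_deploy,
        p.automated_production_deploy,
    ]
    level = 1  # Level 1 (manual processes) is the baseline
    for achieved in ladder:
        if not achieved:
            break
        level += 1
    return level


LEVEL_NAMES = {
    1: "Manual Processes",
    2: "Scripted Automation",
    3: "Continuous Integration (CI)",
    4: "Continuous Delivery (CD)",
    5: "Continuous Deployment",
}

if __name__ == "__main__":
    team = Practices(scripted_builds=True, continuous_integration=True)
    level = maturity_level(team)
    print(f"Level {level}: {LEVEL_NAMES[level]}")
```

Treating the levels as strictly cumulative is a simplification; in practice a team may have partially adopted practices from several levels at once.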

🛠️ Key Tools Facilitating Build and Release Management

Several tools have become integral to achieving build and release management maturity:

- Jenkins: An open-source automation server that supports building, testing, and deploying applications.

- GitHub Actions: A CI/CD platform that automates workflows directly from GitHub repositories.

- GitLab CI/CD: An integrated CI/CD tool within GitLab that automates the software delivery process.

- Azure Pipelines: A cloud-based CI/CD service that supports building, testing, and deploying applications to various platforms.

- Argo CD: A declarative, GitOps continuous delivery tool for Kubernetes that synchronizes application state with Git repositories.
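The idea behind Argo CD's GitOps model is a reconciliation loop: compare the desired state declared in Git with the live state in the cluster, then act on the difference. The following Python sketch illustrates that comparison with plain dictionaries. It does not use Argo CD's API; `plan_sync`, `apply_plan`, and the `prune` flag are hypothetical names, and the flag is only loosely analogous to Argo CD's resource pruning option.

```python
from typing import Dict, List, Tuple

# A resource spec is modeled as a plain dict; real tools compare Kubernetes manifests.
Spec = Dict[str, object]


def plan_sync(
    desired: Dict[str, Spec],
    live: Dict[str, Spec],
    prune: bool = False,
) -> List[Tuple[str, str]]:
    """Return (action, resource_name) pairs that would make `live` match `desired`.

    `desired` stands in for manifests read from Git; `live` for what is running.
    """
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != desired[name]:
            actions.append(("update", name))  # drift: live state differs from Git
    if prune:
        # Delete resources no longer declared in Git only when pruning is enabled.
        for name in sorted(set(live) - set(desired)):
            actions.append(("delete", name))
    return actions


def apply_plan(
    desired: Dict[str, Spec],
    live: Dict[str, Spec],
    actions: List[Tuple[str, str]],
) -> None:
    """Mutate `live` in place; a real controller would call the cluster API instead."""
    for action, name in actions:
        if action in ("create", "update"):
            live[name] = dict(desired[name])
        elif action == "delete":
            del live[name]


if __name__ == "__main__":
    git_state = {"web": {"image": "web:1.2", "replicas": 3}, "worker": {"image": "worker:0.9"}}
    cluster_state = {"web": {"image": "web:1.1", "replicas": 3}, "old-cron": {"image": "cron:0.1"}}
    plan = plan_sync(git_state, cluster_state, prune=True)
    print(plan)  # [('update', 'web'), ('create', 'worker'), ('delete', 'old-cron')]
    apply_plan(git_state, cluster_state, plan)
    print(plan_sync(git_state, cluster_state, prune=True))  # [] once in sync
```

Because Git is the source of truth, rolling back in this model amounts to reverting a commit and letting the next reconciliation pass bring the live state back in line.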

📈 Case Study: Enhancing Build and Release Management in a Tech Company

A leading technology company faced challenges with slow release cycles and frequent deployment errors. By implementing Jenkins for automated builds and tests, and Argo CD for continuous delivery, they achieved:

- 50% Reduction in Deployment Time: Automated pipelines accelerated the release process.

- 30% Decrease in Deployment Failures: Early detection of issues through automated testing improved reliability.

- Enhanced Collaboration: Integration of tools facilitated better communication between development and operations teams.
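Improvements like these are easiest to verify when the before and after figures are computed the same way. A minimal sketch, assuming you can export deployment records with a duration and a success flag; the `Deployment` type and the sample numbers are hypothetical and are not data from the case study.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Deployment:
    """One deployment record (hypothetical shape; adapt to your CI/CD tool's data)."""
    duration_minutes: float
    failed: bool


def failure_rate(deploys: List[Deployment]) -> float:
    """Fraction of deployments that failed, between 0.0 and 1.0."""
    if not deploys:
        raise ValueError("need at least one deployment")
    return sum(d.failed for d in deploys) / len(deploys)


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage of `before`."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (before - after) / before * 100


if __name__ == "__main__":
    # Hypothetical sample data for illustration only, not figures from the case study.
    before = [Deployment(60, True), Deployment(50, False), Deployment(70, False)]
    after = [Deployment(40, False), Deployment(35, True), Deployment(45, False), Deployment(40, False)]

    time_cut = percent_reduction(
        mean(d.duration_minutes for d in before),
        mean(d.duration_minutes for d in after),
    )
    failure_cut = percent_reduction(failure_rate(before), failure_rate(after))
    print(f"Mean deployment time reduced by {time_cut:.0f}%")
    print(f"Failure rate reduced by {failure_cut:.0f}%")
```

A decrease in deployment failures can mean a lower failure count or a lower failure rate; if deployment frequency changed between the two periods, comparing rates is usually the fairer choice.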

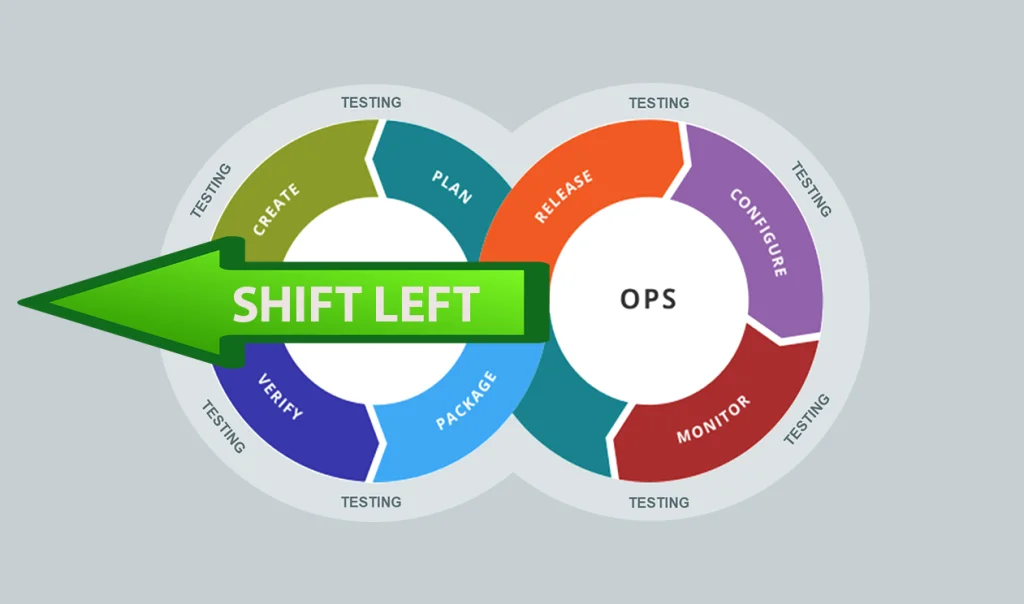

📊 Visualizing the Continuous Delivery Pipeline

This diagram illustrates the stages of a continuous delivery pipeline, from code commit to deployment, highlighting the automation and integration at each step.
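The commit-to-deployment flow can be sketched in a few lines of Python as a sequence of stages that stops at the first failure. This is a hypothetical model rather than any particular tool's API: `run_pipeline`, the stage names, and the `approve` callback are illustrative assumptions, and the stage actions are stubs standing in for real build, test, and deploy steps.

```python
from typing import Callable, List, Tuple

# Each stage is a (name, action) pair; an action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(
    stages: List[Stage],
    production_deploy: Callable[[], bool],
    approve: Callable[[], bool],
) -> List[str]:
    """Run stages in order, stopping at the first failure, and return a log.

    `approve` models the manual gate of continuous delivery; passing
    `lambda: True` models continuous deployment (no human in the loop).
    """
    log: List[str] = []
    for name, action in stages:
        if not action():
            log.append(f"{name}: FAILED, pipeline stopped")
            return log  # fail fast: later stages never run
        log.append(f"{name}: ok")
    if not approve():
        log.append("production: awaiting approval")
        return log
    log.append("production: ok" if production_deploy() else "production: FAILED")
    return log


if __name__ == "__main__":
    # Hypothetical stub actions; real ones would invoke build tools, test runners, and deploy APIs.
    stages: List[Stage] = [
        ("build", lambda: True),
        ("unit tests", lambda: True),
        ("package artifact", lambda: True),
        ("deploy to staging", lambda: True),
        ("acceptance tests", lambda: True),
    ]
    for line in run_pipeline(stages, production_deploy=lambda: True, approve=lambda: False):
        print(line)
```

Switching `approve=lambda: False` to `approve=lambda: True` turns the same pipeline from continuous delivery (Level 4) into continuous deployment (Level 5), mirroring the distinction the maturity model draws between those two levels.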

📚 Conclusion: Embracing Build and Release Management for DevOps Success

Effective build and release management is essential for organizations aiming to achieve DevOps maturity. By adopting automated tools and practices, teams can enhance software quality, accelerate release cycles, and foster a culture of continuous improvement.

📅 Next in the Series:

“DevSecOps in Practice: Embedding Security into Every Commit”